Background¶

These notes are an introduction to Discrete Variable Representations (DVRs) using an example that has particularly general applicability. The Finite Element Method with a Discrete Variable Representation (FEM-DVR) provides a way to solve the time-independent or time-dependent Schrödinger equation that, like all DVR methods, is more accurate and faster than finite difference. This method is one of a family of Discrete Variable Representations that are in common use today in chemistry and physics. It can be applied to problems with any potential on any interval. These notes explain the FEM-DVR method using Gauss-Lobatto quadrature, and also outline the Crank-Nicolson propagator for solving the time-dependent Schrödinger equation.

Introduction¶

Here we describe a method for solving the Schrödinger equation for a particle moving in one dimension with coordinate \(x\) for any potential \(V (x)\) on any interval of \(x\). The variational method of course provides a way to do so, but its application generally poses a practical problem we would like to overcome: If we expand the unknown wave function in \(H |\Psi\rangle = E |\Psi\rangle\) in a finite set of basis functions

substitute it into the Schrödinger equation, and project from the left with \(\langle \varphi_m |\), we come quickly to the familiar matrix representation

that we can also get from the variational theorem. This is a variational basis representation of the Schrödinger equation.

To construct this matrix eigenvalue problem we need the matrix elements of both the kinetic energy and potential energy operators \(\hat{T}\) and \(\hat{V}\). If we choose a basis for which the kinetic energy matrix elements are easy to evaluate, energy operators T and then try to apply it to solving this problem for various potentials, we generally find that the matrix elements are difficult to perform for many of those potential functions. The DVR is a way of getting around this problem. It is described in an extensive literature on many kinds of DVR that began in the 1980s with seminal work by John Light in the Chemistry Department at the University of Chicago and his coworkers [1]. The central idea is this: We choose a particular form of basis functions, no matter what the potential, that are constructed based on a Gaussian quadrature. Then we use that Gaussian quadrature to do every integral in the problem of both the kinetic and potential energy operators. The result is that the potential energy matrix is diagonal

where \(x_n\) is a Gauss quadrature point. In other words, in this basis set method the potential energy matrix is always diagonal, and there are no potential energy integrals to be evaluated. We only have to evaluate the potential on a grid of quadrature points in \(x\). So if we can evaluate the potential energy function as a function of the coordinates, we can reduce the Schrödinger equation directly to a matrix eigenvalue equation. To see how this works we have first to familiarize ourselves with the basics of Gaussian quadrature for performing integrals in general.

Gassian Quadrature¶

A Gaussian quadrature is a set of points and weights for approximating an integral as a sum. The most basic example is the Gauss-Legendre quadrature summarized together with many other Gauss quadratures in Abramowitz and Stegun [2] (the NBS Handbook of Mathematical Functions) starting on page 887, or in the successor to reference [2], in NIST Digital Library of Mathematical Functions https://dlmf.nist.gov/ chapter 3, section 3.5 on Gauss quadrature (see Eqs. 3.5.15 and 3.5.19)

the points, \(x_i\) , are the zeros of the nth order Legendre polynomial \(P_n(x)\), and the weights, \(w_i\) are given by formulas involving the derivative of that polynomial, in this case, \(w_i = 2/ ((1 - x_i )^2 [P_n (x_i)]^2)\). The error is proportional to the \(2n^{th}\) derivative of the integrand. That means that this quadrature is exact if \(f(x)\) is any polynomial of degree \(2n - 1\) or lower. This property is at the heart of Gauss quadrature and the DVR method. The quadrature can be scaled onto any finite interval

by scaling the points and weights. The Legendre polynomials do not have zeros at the endpoints of the interval [-1, 1], so all the quadrature points at any order are interior to that interval. For our DVR we need to have points in the quadrature at the endpoints of the interval, because that is where we have to apply the boundary conditions of the Schrödinger equation. That quadrature is called the Gauss-Lobatto quadrature [2],

which can of course be written in the form of Eq. (1) with the same scaling. The only difference is that before the scaling the first and last points are explicitly \(-1\) and \(+1\), with the weights \(\frac{2}{n(n -1)}\). There are algorithms for computing the remaining Gauss- Lobatto points and weights at any order, and those are available in C, C++, Fortran and Python libraries. Fixing the two endpoints to be quadrature points lowers the accuracy of the quadrature. It now quadratures polynomials of degree up to \(2n-3\) exactly. We will use the Gauss-Lobatto quadrature here, but there are many other Gauss quadratures. In general they quadrature integrals of the form

with some positive definite weight function \(W(x)\). For example if \(a = -\infty\) and \(b = \infty\), with the weight function \(W = e^{-x^2}\), the quadrature is based on Hermite polynomials and is called Gauss-Hermite quadrature. See Abramowitz and Stegun [2] for a summary of eight common quadratures. Of course, there is a Wikipedia page too.

DISCRETE VARIABLE REPRESENTATION OF THE WAVE FUNCTION AND HAMILTONIAN¶

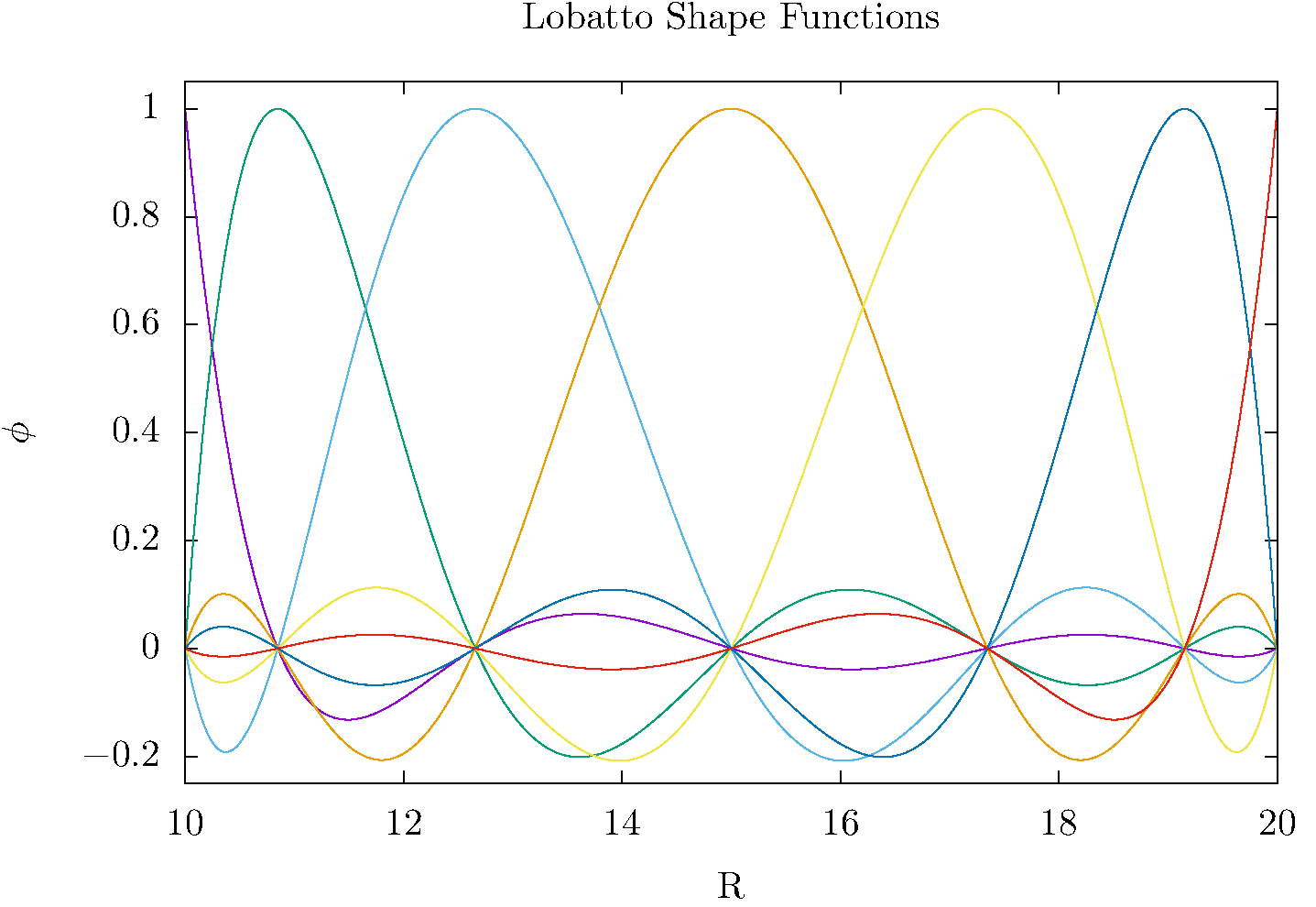

The Gauss-Lobatto quadrature points provide a way to construct \(n\) polynomials of degree \(n - 1\) called “interpolating polynomials” that are each zero at all but one of the quadrature points,

and are equal to 1 at \(x = x_j\) . So if we have the values of a function at the quadrature points we can interpolate it by effectively fitting it with Nth order polynomials as

this fit reproduces the function exactly at the quadrature points, and provides a polynomial interpolation between them. This kind of interpolation, using interpolating poynomials of the form of Eq. (2), the starting idea of the DVR and also the start of the derivation of quadrature rules like Simpson’s rule that involve evenly (or unevenly) spaced quadrature points. To form our DVR basis functions, \(\phi_j(x)\), we know normalize these interpolating polynomials by multiplying by \(1/\sqrt{w_j}\),

These functions are now normalized in the very specific sense that they are orthonormal within the quadrature approximation

- The central idea of a DVR using the Gauss-Lobatto quadrature is to

Expand our unknown wave function in these Gauss-Lobatto basis functions and then

Define every matrix element as its approximation by the underlying Gauss-Lobatto quadrature

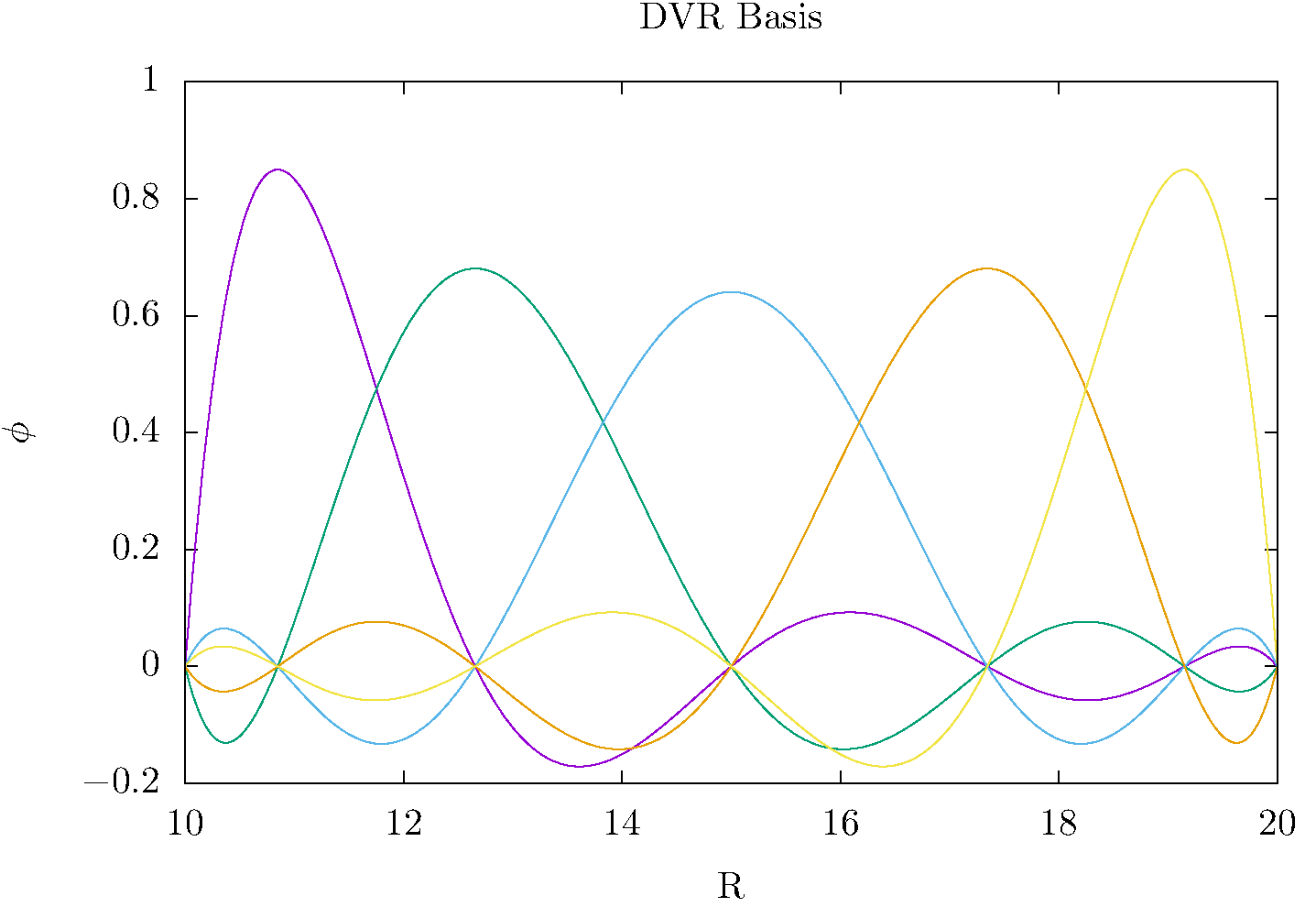

The idea of using the Gauss-Lobatto quadrature to define a DVR was introduced by Manolopoulos and Wyatt [3, 4] in 1989. It allows us to apply boundary conditions at the two endponts, a and b of the interval on which the quadrature is defined. We will apply the boundary conditions that the wave function is zero at the endpoints, which we can accomplish by simply excluding from the basis the basis functions that are nonzero at first and last of the quadrature points. We expand the wave function in the DVR basis

where the unknown coefficients are related to the values of the wave function \(\Psi(x)\) at the grid points by \(\psi_m = \Psi(x_m)w_m^{1/2}\). Then the matrix of the kinetic energy is

where we integrated by parts and use the boundary condition we are applying to get an obviously symmetric matrix result. We can see that the integrand in Eq. (4) is of order \(2(n - 2) = 2n - 4\). That’s because the order of the interpolating polynomials in Eq. (2) is \(n-1\), and so their derivatives are of order \(n-2\). Thus the Gauss-Lobatto quadrature, which integrates polynomials of order \(2n-3\), is exact for the kinetic energy.

For the potential energy we have

The potential matrix is diagonal because the DVR basis functions are at all the quadrature points but one. That has to be the same point for the two basis functions, or else the integral is zero. With this DVR, the time-independent Schrödinger equation has been reduced to a matrix equation

The kinetic energy matrix is full and is treated exactly. The potential energy matrix elements are approximated by the quadrature. To improve the approximation we increase the order of the quadrature. We only require the values of the potential at the quadrature points, and once we have the coefficients we can scale them so that the function to be normalized.

DVRs are a popular numerical approach in one or more dimensions. The sort of DVR described here can be used for the angular degrees of freedom in spherical polar coordinates, for example, with the change of variable x = cos . To increase the accuracy of the DVR basis set representation of the wave function one only needs to increase the order of the underlying quadrature.

For large intervals in x and wave functions with short wavelengths, we might need many hundreds or thousands of grid points. In this approach, that would mean calculating the points and weights to high precision for a quadrature that might of the order of thousands. To escape that problem, there is a popular variant of this method called the FEM-DVR.

FINITE-ELEMENT METHOD WITH DISCRETE VARIABLE REPRESENTATION¶

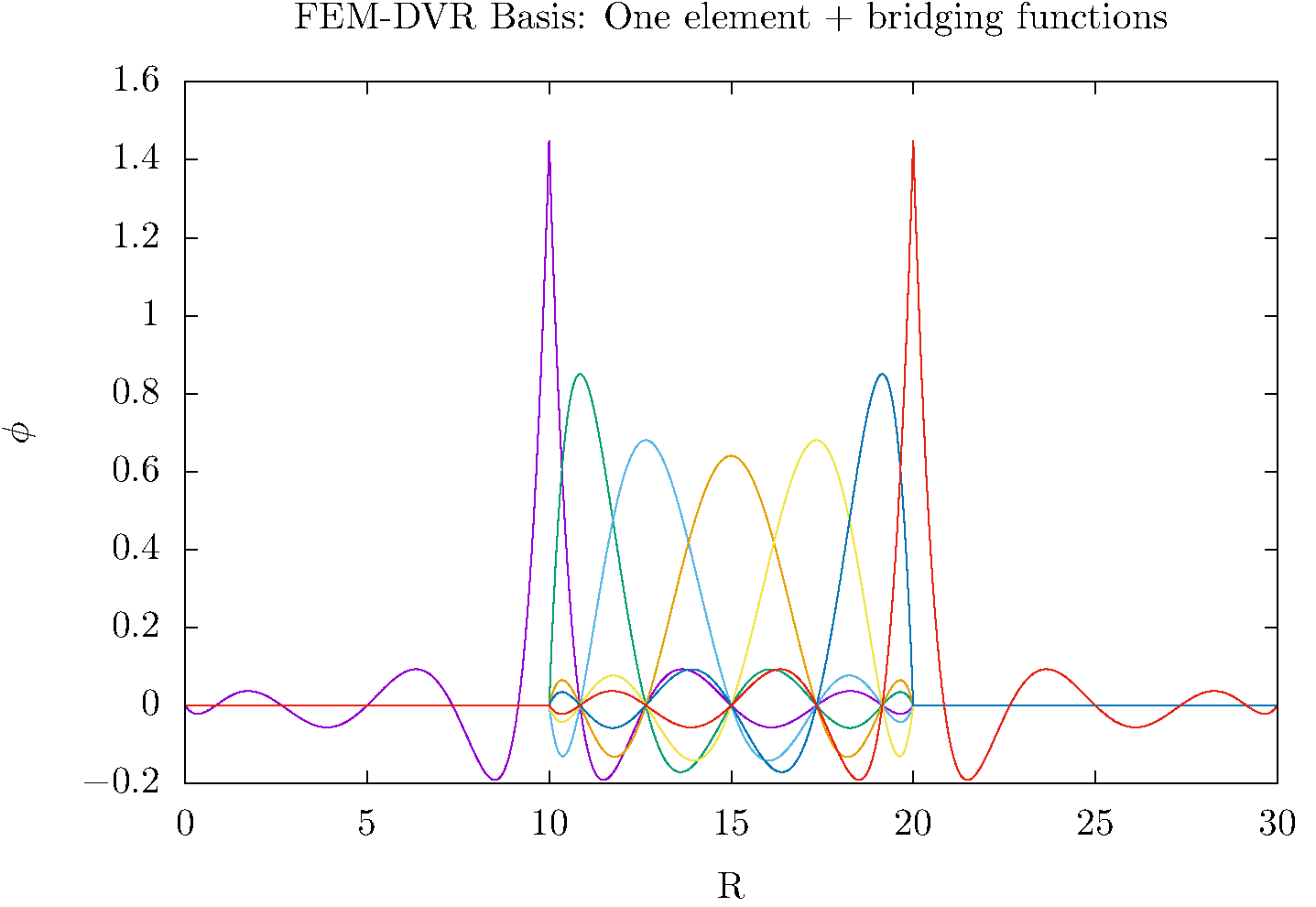

In this method, first proposed by Rescigno and McCurdy [5] in 2000, we divide up the interval in x into sub-intervals call finite elements. With each of those elements we associate a separate Gauss-Lobatto quadrature and a separate DVR basis constructed according to Eq. (3). However, we must pay special attention to the boundaries between the elements. To allow the wave function to be continuous across the finite element boundaries we must include the Lobatto shape functions that we eliminated above, and that are nonzero at the ends of the finite element interval. To do so, we start by labeling the Lobatto-shape functions according to which interval, \(\alpha\), they are associated with

and similarly labeling the DVR basis functions

We can then create bridging functions that connect the elements \(\alpha\) and \(\alpha+1\) according to

The bridging functions are normalized, because they are integrated by the combination of the quadratures in the two finite elements.

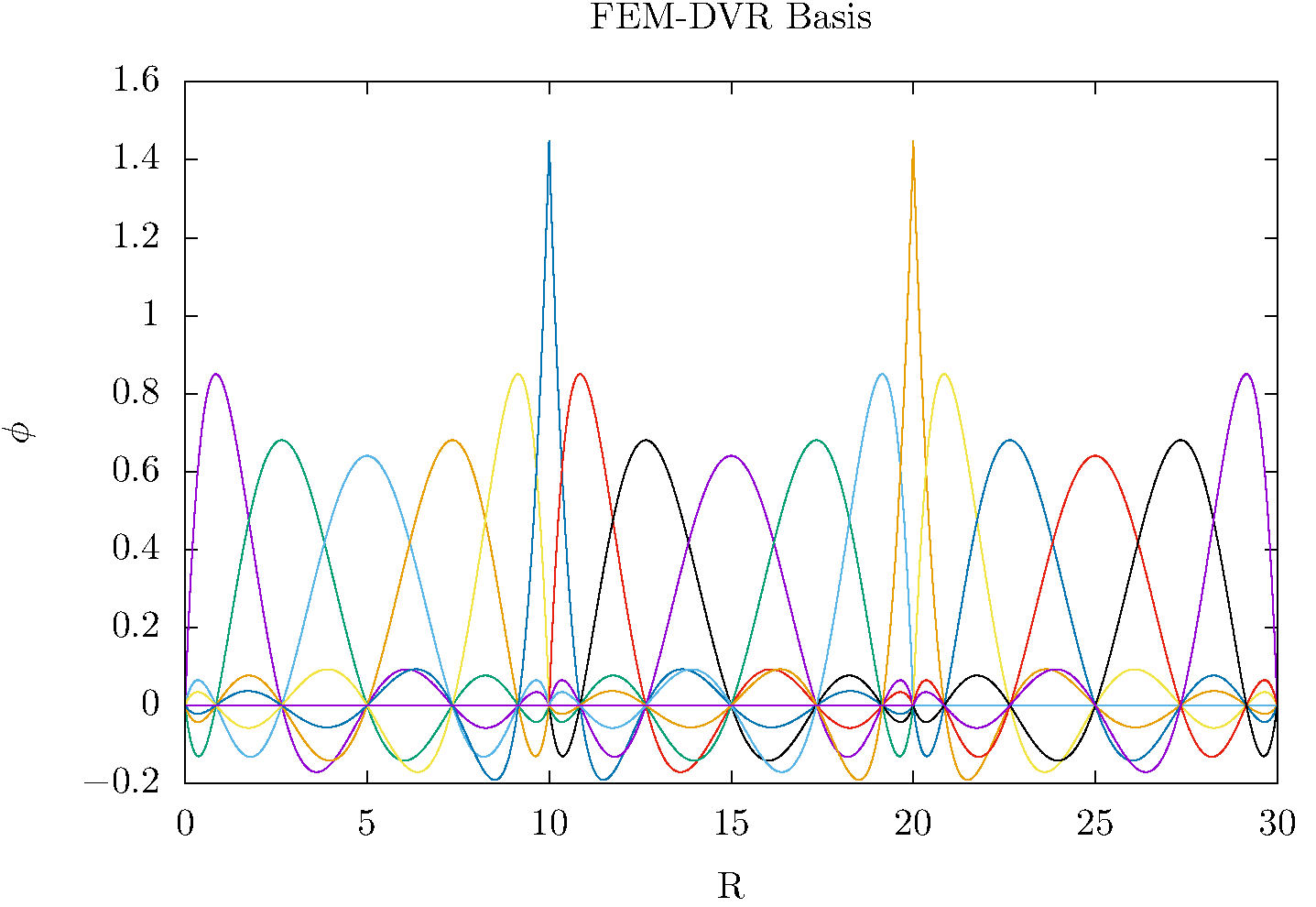

This idea is illustrated in the figures directly above. The bridging functions extend across two elements, while the FEM-DVR basis within each element is nonzero only on that interval.

We can extend this process to create as many elements as we want. So for example, we might use 20th order quadrature in each element and have 100 elements of various sizes cover the entirety of the interval \(a \le x \le b\).

Rescigno and McCurdy~cite{Rescigno_McCurdy2000} give the formulas for the kinetic energy matrix elements for this method, which are still exactly evaluated by the Gauss-Lobatto quadrature(s) over the entire domain of \(x\). The potential matrix elements remain diagonal. The kinetic energy is no longer a full matrix, but has the form of overlapping blocks originating from their finite elements. Schematically we can display the form of the Hamiltonian for four elements as

where there are four blocks in the kinetic energy matrix, the first and last of which are smaller than all the intermediate blocks, because the lack the first and last basis functions that would have been nonzero at the boundaries of the entire interval in \(x\).

The FEM-DVR basis is orthogonal within the overall Gauss-Lobatto quadrature. The kinetic energy matrix is exactly evaluated with the quadrature. The potential energy is only required at the quadrature points,

The DVR representation not variational because both the potential and overlap matrix elements are approximated by the quadrature and are not exact, although with moderately dense grids that property barely noticeable. Also, the FEM-DVR enforces only continuity at the finite element boundaries and not continuity of the derivatives of the wave function. Therefore there is first order error at each finite element boundary. Again, with moderately dense grids the discontinuity in the derivative at the boundaries is very slight and causes no numerical pathologies.

Higher dimensions: Extension of both the DVR and FEM-DVR methods to higher dimensions is straightforward. Suppose we had a problem with \(x\) and \(y\) degrees of freedom. In a product basis of separate DVR basis functions \(\phi_i(x)\) and \(\chi_m(y)\) the kinetic and potential energy matrices are

and extentions to higher dimensions are similar. One only needs the potential evaluated at the quadrature points, and the kinetic energy matrix becomes increasingly sparse as the number of dimensions increases. Scalable algorithms for implementing the FEM-DVR in higher dimensions take advantage of that sparsity, both in storage and numerical operations.

DVRs constructed from other orthogonal polynomials and the notion of a “proper DVR”¶

Orthogonal polynomials tridiagonalize the matrix of the position with the weight with which the orthogonal polynomials are orthogonal

That is an essential property that is derivable from the three-term recursion relations satisfied by all orthogonal polynomials. Every set of orthogonal polynomials defines a quadrature for their values of \(a\), \(b\) and \(W(x)\). For example we can construct a DVR for the interval \(-\infty < x < \infty\) using harmonic oscillator functions that apply the proper bound-state boundary conditions at infinity, and the underlying quadrature will be the Gauss-Hermite quadrature as described in the appendices of reference [6].

If we begin with a real orthogonal basis \(\{\phi_i(x)\}_{i=1}^{N}\) that tridiagonalizes the position operator, \(x\), we can generate a “proper DVR”, in the terminology of the appendices of the review article [6] by Hans Dieter-Meyer and coworkers, as follows.

Diagonalize the matrix of the position operator \(\mathbf{Q}\)

where \(\mathbf{X}\) is the matrix of position eigenvalues \(X_{\alpha,\beta} = x_\alpha \delta_{\alpha,\beta}\)

Transform the exactly evaluated matrix of the kinetic energy, \(\mathbf{T}\) to the basis of position eigenfunctions

Construct the DVR representation of the Hamiltonian as

in which the potential is diagonal, so no matrix elements of it are required.

The DVR basis in which the Hamiltonian is represented by \(H^{DVR}\) is

These functions also provide the “interpolating basis” mentioned below.

Because the original basis tridiagonalized the position operator, we can construct the underlying Gauss quadrature, whose abscissas are \(\{x_\alpha\}_{\alpha=1}^N\), and whose weights are given by

which in this case does not depend on \(k\). If the original basis had not tridiagonalized \(x\) this formula for the weights would have depended on \(k\).

In a proper DVR we can use the properties of the underlying Gauss quadrature to show that the original basis satisfied discrete orthonormality

and discrete completeness

The combination of these two properties means that the the DVR and an expansion of the wave function in the original orthogonal polynomials are exactly equivalent.

Moreover the DVR basis in a proper DVR has the important discrete \(\delta\) -property

This relation tells us that for any function spanned by the original basis we have, using the quadrature,

So that if we find the eigenvectors of \(\mathbf{H}^{DVR}\) or use it in the DVR representation of linear equations like \((E-\mathbf{H}^{DVR})\boldsymbol{\psi} = \mathbf{d}\), the resulting vectors represent the values of \(\psi\) on the gridpoints

Equally important is the fact that the DVR basis provides the interpolating basis whereby we can get \(\psi\) at any value of $x$ via its expansion

Note: If the original basis does not tridiagonalize the position operator, but instead tridiagonalizes an invertable function of \(x\), like \(1/x\) or \((x_0-x)^2\), a proper DVR can be generated by diagonalizing that operator, and writing the abscissas \(x_\alpha\) in terms of the eigenvalues of that operator.

Time propagation using the FEM-DVR and Crank-Nicolson propagator¶

Finite difference in time as a route to approximate solutions of the time-dependent Schrödinger equation¶

The time-dependent Schrödinger equation has been converted by our DVR to a time-dependent matrix equation (\(\hbar = 1\)),

which is of course an initial value problem. The simplest way to treat it is by approximating the derivative by finite difference,

which would allow us to step forward in time with a single matrix multiplication. Unfortunately this idea, known as “forward Euler”, produces an unstable algorithm, whose error, depending on the spectrum of \(\mathbf{H}\) (its eigenvalues) grows exponentially. There are many ways to remedy this problem at the cost of more matrix multiplications per step, and several of them can be derived by using higher order finite difference approximations to first derivative involving more points in time. Standard methods, like the variable time-step Runge-Kutta method, and various “predictor-corrector” methods can be found in the literature and software libraries.

A propagator that has the particularly important advantage for quantum mechanics of being unitary is due to Crank and Nicolson~ [7] (whose original paper was about heat-conduction equations). We can ” derive” it easily by replacing the right hand side by an average of it evaluated at \(t\) and \(t+\Delta\)

We can write the Crank-Nicolson propagator result in two equivalent forms,

Implementing the first relation in Eq. (8) to take a time step requires one matrix multiplication plus a single solution of linear equations. It is one of a class of “implicit” methods that require a solution of linear equations in contrast to “explicit” methods that require only matrix-vector multiplications. Solving the linear equations is the most efficient implementation of the Crank-Nicolson propagator if the Hamiltonian is time dependent, because solving one set of linear equations requires much less computation than inverting a matrix. If the Hamiltonian is not time dependent however we can construct the inverse once and for all and use it to take any number of time steps, which then only require one matrix-vector multiplication each.

A single matrix inversion and matrix-matrix multiplication then allows us to take $n$ time steps with only n matrix-vector multiplications.

Properties of the Crank-Nicolson propagator¶

Unitarity: Crank-Nicolson propagation is unitary, meaning that for each time step the norm of the wave function is conserved. The reason is that the matrix \(\mathbf{U}\) is unitary, including the case that \(H\) is time-dependent. Proof uses the fact that \(H\) is hermitian, \(H^\dagger = H\):

So \(\vec{\psi} (t+\Delta)^\dagger \cdot \vec{\psi}(t+\Delta)= \vec{\psi}(t)^\dagger \mathbf{U}^\dagger \cdot \mathbf{U} \vec{\psi}(t) = \vec{\psi}(t)^\dagger \cdot \vec{\psi}(t)\) and the norm of the wave function is preserved at every step.

Error is third order: The order of the error in the Crank-Nicolson propagator is \(\Delta^3\), as we see by expanding in powers of \(\Delta\) and comparing with the expansion of \(\exp(-i\mathbf{H}\Delta)\),

Stability: The Crank-Nicolson propagator is unconditionally stable, meaning that no matter what the step size and spectrum of \(\mathbf{H}(t)\) the error of the propagation does not increase exponentially as for forward Euler.

References¶

- 1

J V. Lill, Gregory Parker, and John Light. The discrete variable–finite basis approach to quantum scattering. The Journal of Chemical Physics, 85:900–910, 07 1986. doi:10.1063/1.451245.

- 2(1,2,3,4)

Milton Abramowitz and Irene A. Stegun. Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover, New York, ninth dover printing, tenth gpo printing edition, 1964.

- 3

D.E. Manolopoulos and R.E. Wyatt. Quantum scattering via the log derivative version of the kohn variational principle. Chemical Physics Letters, 152(1):23 – 32, 1988. doi:https://doi.org/10.1016/0009-2614(88)87322-6.

- 4

David E. Manolopoulos, Michael D'Mello, and Robert E. Wyatt. Quantum reactive scattering via the log derivative version of the kohn variational principle: general theory for bimolecular chemical reactions. The Journal of Chemical Physics, 91(10):6096–6102, 1989. doi:10.1063/1.457428.

- 5

T. N. Rescigno and C. W. McCurdy. Numerical grid methods for quantum-mechanical scattering problems. Phys. Rev. A, 62:032706, Aug 2000. doi:10.1103/PhysRevA.62.032706.

- 6(1,2)

M. H. Beck, A. Jäckle, G. A. Worth, and H.-D. Meyer. The multiconfiguration time-dependent Hartree method: A highly efficient algorithm for propagating wavepackets. prep, 324:1–105, 2000.

- 7

J. Crank and P. Nicolson. A practical method for numerical evaluation of solutions of partial differential equations of the heat-conduction type. Advances in Computational Mathematics, 6(1):207–226, Dec 1996. doi:10.1007/BF02127704.